Introduction

In our modern information age, organizations across various sectors – from legal firms to financial institutions and government agencies – face a common challenge: managing an overwhelming volume of documents that require meticulous examination. Legal contracts, medical records, financial reports, and regulatory filings often span hundreds of pages, demanding careful scrutiny to extract vital information, identify key clauses, and ensure compliance. This labor-intensive process not only consumes valuable time but also increases the risk of human error, potentially leading to missed opportunities, compliance issues, or flawed decision-making.

With the advancement of Artificial Intelligence (AI), particularly Large Language Models (LLMs), companies can now leverage this technology to efficiently navigate vast document repositories, enabling professionals to focus on high-value tasks such as client interaction and informed decision-making.

Problem Statement

Wagstaff Law Firm, specializing in cases involving defective medical devices and herbicides, faced the challenge of sifting through extensive client questionnaires embedded in lengthy PDFs. They needed to quickly identify clients meeting specific criteria, such as those who used a defective herbicide and were eligible to file a claim. The firm approached BugendaiTech to streamline this process and alleviate the burden on their legal team.

Project Overview

BugendaiTech conceptualized and developed a Legal Document Chatbot using a Large Language Model (LLM) with a Retrieval-Augmented Generation (RAG) approach. The solution lets users converse with PDF documents in natural language and extract relevant information efficiently. Questions such as how many clients use a particular product, or how many clients from a particular state have a particular disease, can be answered easily. This lets the law firm identify and contact all clients in a given situation at once.

Key Components

- PDF Upload: Users can upload PDF documents from which they need to extract information.

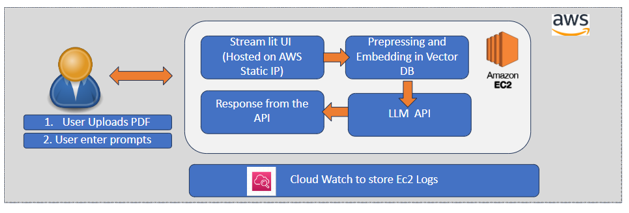

- User Interface: Built using the Streamlit framework, the website is hosted on AWS with a static IP and secure login credentials.

- Preprocessing: After upload, the PDF is parsed and analyzed; the algorithm detects the PDF type, chunks by structure, converts content to Q/A pairs, and applies metadata tagging for relevance.

- Embedding & Vector DB: Using the “sentence-transformers/all-mpnet-base-v2” model, chunks are embedded and stored in a FAISS vector database.

- Retrieval: Relevant parts are retrieved dynamically (variable number of chunks) and sent to the LLM to improve answer quality.

- LLM Response: The LLM receives the question + retrieved chunks + custom prompt. Primary LLM: Claude 2.1 (options for others as well).

- AWS Services: Components hosted on Amazon EC2; open-source models hosted on Amazon SageMaker.
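
The preprocessing, embedding, retrieval, and prompting steps listed above can be sketched roughly as follows. This is a minimal illustration, not the production code: the word-count `embed` function is a toy stand-in for the "sentence-transformers/all-mpnet-base-v2" model, the brute-force cosine search stands in for a FAISS index, and the score threshold is only one assumed way of returning a variable number of chunks. The `Chunk` class, the sample questionnaire answers, and the prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Chunk:
    """One question/answer pair from a questionnaire, plus metadata tags."""
    question: str
    answer: str
    metadata: dict = field(default_factory=dict)

    @property
    def text(self) -> str:
        return f"Q: {self.question}\nA: {self.answer}"


def embed(text: str) -> Counter:
    """Toy stand-in for a sentence-embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list, min_score: float = 0.2, max_k: int = 5) -> list:
    """Return every chunk scoring at least min_score, best first, capped at max_k.

    The threshold makes the number of returned chunks vary per query
    (an assumed mechanism; the source does not say how this is done).
    """
    q = embed(query)
    scored = [(cosine(q, embed(c.text)), c) for c in chunks]
    scored = [(s, c) for s, c in scored if s >= min_score]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:max_k]]


def build_prompt(query: str, retrieved: list) -> str:
    """Combine a custom instruction, the retrieved chunks, and the question."""
    context = "\n\n".join(c.text for c in retrieved)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical Q/A chunks as preprocessing might produce them.
chunks = [
    Chunk("Which herbicide product did you use?", "Product X, from 2010 to 2015",
          {"client_id": "C-001", "page": 3}),
    Chunk("In which state do you live?", "Montana",
          {"client_id": "C-001", "page": 1}),
    Chunk("Have you been diagnosed with any disease?", "Yes, in 2018",
          {"client_id": "C-001", "page": 4}),
]

query = "Which herbicide product did the client use?"
hits = retrieve(query, chunks)
prompt = build_prompt(query, hits)  # this string would be sent to the LLM
```

In a real deployment the `embed` and `retrieve` functions would be replaced by the embedding model and a vector index lookup; the shape of the flow (chunk, embed, select a variable number of chunks, assemble the prompt) stays the same.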

CSV/SQL Agent Enhancement

To address limitations in RAG—especially queries needing whole-document context—BugendaiTech implemented a CSV/SQL Agent. The entire PDF is converted into a dataframe with questions as columns and answers as records. Using function-calling, users can ask complex questions like “How many clients meet these particular conditions?”—capabilities beyond standard RAG.
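
A rough sketch of the idea, under stated assumptions: the source describes a dataframe, whereas this example loads the same layout (questions as columns, one record per client) into an in-memory SQLite table using only the Python standard library. The column names, the sample records, the `count_clients` tool, and the shape of the `tool_call` dictionary are hypothetical, chosen to show how a function-calling model could pass structured arguments rather than free text.

```python
import sqlite3

# Hypothetical questionnaire answers: one record per client, keyed by question.
records = [
    {"client_id": "C-001", "state": "Montana", "product_used": "Product X", "diagnosis": "yes"},
    {"client_id": "C-002", "state": "Montana", "product_used": "Product Y", "diagnosis": "yes"},
    {"client_id": "C-003", "state": "Ohio", "product_used": "Product X", "diagnosis": "no"},
    {"client_id": "C-004", "state": "Montana", "product_used": "Product X", "diagnosis": "yes"},
]

COLUMNS = ("client_id", "state", "product_used", "diagnosis")


def load_table(rows):
    """Create an in-memory table with one column per question and insert the rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(f"CREATE TABLE clients ({', '.join(c + ' TEXT' for c in COLUMNS)})")
    conn.executemany(
        f"INSERT INTO clients VALUES ({', '.join('?' for _ in COLUMNS)})",
        [tuple(r[c] for c in COLUMNS) for r in rows],
    )
    return conn


def count_clients(conn, filters: dict) -> int:
    """Tool a model could call: count clients whose answers match every filter."""
    unknown = set(filters) - set(COLUMNS)
    if unknown:
        # Reject column names the model made up instead of interpolating them.
        raise ValueError(f"unknown columns: {sorted(unknown)}")
    where = " AND ".join(f"{col} = ?" for col in filters) or "1 = 1"
    row = conn.execute(
        f"SELECT COUNT(*) FROM clients WHERE {where}", tuple(filters.values())
    ).fetchone()
    return row[0]


conn = load_table(records)

# Arguments as a model might emit them for the question
# "How many Montana clients used Product X and have a diagnosis?"
tool_call = {
    "name": "count_clients",
    "arguments": {"state": "Montana", "product_used": "Product X", "diagnosis": "yes"},
}
matching = count_clients(conn, tool_call["arguments"])
print(matching)
```

Because the count runs over every record, the answer reflects the whole document, which a retriever returning only a few chunks cannot guarantee.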

Benefits & Achievements

- Reduced Retrieval Time: Significant time savings vs manual search.

- Cost Savings: Open-source embeddings eliminate API costs without performance loss.

- Large PDF Handling: Robust preprocessing for PDFs up to ~200 pages.

- Chat History: Users can review prior Q&A.

- Multi-LLM Support: Choose among GPT-4, Llama 2, and others.

- Enhanced Security: Option to use open-source LLMs on Amazon Bedrock to reduce data-leak risks while keeping choice of closed/open models.

Conclusion

The RAG Legal Document Chatbot—enhanced with a CSV/SQL agent—shows how Generative AI can transform time-consuming processes. Combining BugendaiTech’s AI expertise with Wagstaff Law Firm’s innovation produced an app that reduces cognitive load and lets legal teams focus on high-value work, improving efficiency and decisions across document-heavy workflows.
